$ docker run 2021

David Quintanar Pérez

BI Consultant

Problems that arise when developing

Docker as a solution

When you distribute what you built to whoever needs it, you can do so quickly and simply, without worrying about pre-installing anything, because everything was defined from the moment you started building.

When you specify the environment you need, you can replicate it in development, in production, or on any computer you like without extra effort. As long as Docker is installed, it will run properly.

«Docker was created in 2013, but if you still don’t know it, 2021 will be the year you start using it. In Stack Overflow’s developer survey it now ranks second among the platforms developers love most and first among the ones they most want to use.»

What is Docker? And how does it work?

Containers

Let’s take a closer look at what Docker is and how it works. If you have already had an initial encounter with this tool, you will have read or heard about containers.

To begin with, containers are not unique to Docker. Linux containers allow applications to be packaged and isolated so that they can run in different environments. Docker was originally built on LXC, but has moved away from it over time.

Images

Docker takes this a step further, making it easy to create and design containers with the aid of images.

Images can be seen as templates: an ordered set of instructions that describes what a container should contain and how it should be created.
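To make the idea of an image as a list of instructions concrete, here is a minimal sketch. It writes a two-line Dockerfile, builds an image from it and starts a container. The scratch directory, the page content, the `my-site` name and the 8080 port are illustrative assumptions, not part of the original article; `httpd:2.4` is the official Apache HTTP Server image on Docker Hub.

```shell
# Create a scratch directory holding the page we want to serve.
workdir="$(mktemp -d)"
echo '<h1>Hello from a container</h1>' > "$workdir/index.html"

# The Dockerfile is the template: ordered instructions for building the image.
cat > "$workdir/Dockerfile" <<'EOF'
# Start from the official Apache HTTP Server image.
FROM httpd:2.4
# Copy our page into the directory Apache serves by default.
COPY index.html /usr/local/apache2/htdocs/
EOF

# Build and run only if Docker is installed, so the script is safe anywhere.
if command -v docker >/dev/null 2>&1; then
  docker build -t my-site "$workdir"                  # image from the template
  docker run -d --name my-site -p 8080:80 my-site     # container from the image
else
  echo "Docker not found: skipping build and run."
fi
```

If the container starts, the page should be reachable at http://localhost:8080, and `docker rm -f my-site` removes the container again.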

Docker Hub

Docker Hub is now the world’s largest library and community for container images, where you can find images, obtain them, share what you create and manage them. You just need to create an account. Do not hesitate to go and explore it when you finish reading.
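As a rough sketch of what "finding and obtaining" images looks like from the command line, the snippet below searches Docker Hub and downloads two images. The image names and tags are assumptions chosen for illustration; browsing and pulling public images does not require logging in, while sharing your own images does.

```shell
# Image references in "name:tag" form (illustrative choices).
images="httpd:2.4 mongo:latest"

if command -v docker >/dev/null 2>&1; then
  docker search --limit 5 mongo    # look up images on Docker Hub by keyword
  for image in $images; do
    docker pull "$image"           # download the image to this machine
  done
  docker image ls                  # list the images now stored locally
else
  echo "Docker not found: nothing was pulled."
fi
```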

Example

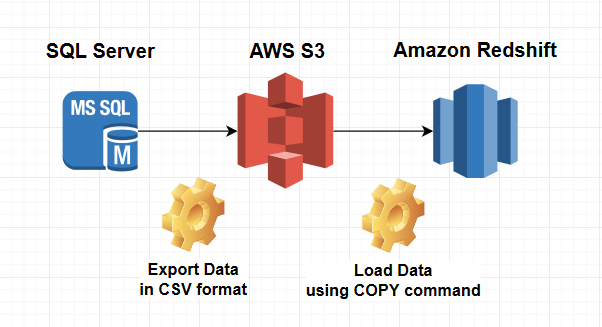

Now imagine you are developing a web application and you need an Apache HTTP Server service at version 2.4 and a MongoDB service at its latest version.

You could set up a container for each service or application using predefined images from Docker Hub, and the containers can communicate with each other over Docker networks.

Alternatively, you could use MongoDB with its stored data coming from your preferred provider’s cloud service. Either setup can be replicated in the development and production environments in the same way, quickly and easily.
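The one-container-per-service setup could be sketched as follows. The network name, container names, host port and image tags (`httpd:2.4`, `mongo:latest`) are assumptions for illustration; pick the tags that match the versions you actually need.

```shell
# Hypothetical names for this example.
network="webapp-net"
db_name="db"
web_name="web"

if command -v docker >/dev/null 2>&1; then
  # A user-defined network lets containers reach each other by name.
  docker network create "$network"
  # One container per service, both attached to the same network.
  docker run -d --name "$db_name"  --network "$network" mongo:latest
  docker run -d --name "$web_name" --network "$network" -p 8081:80 httpd:2.4
  # Show the network and the containers attached to it.
  docker network inspect "$network"
else
  echo "Docker not found: skipping container setup."
fi
```

On this network the web container can address the database by the host name `db`. To clean up afterwards, `docker rm -f web db` followed by `docker network rm webapp-net` removes everything the sketch created.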

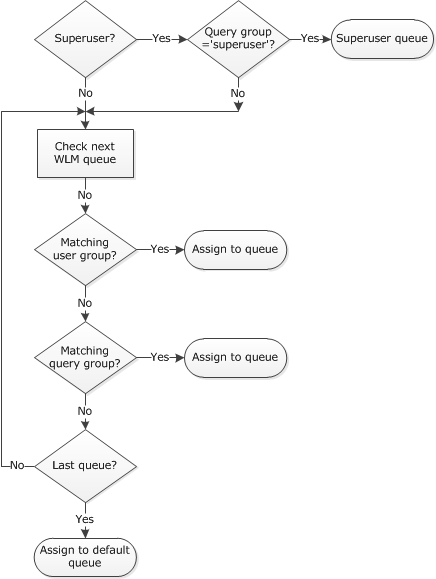

Containers versus Virtual Machines

One difference is that containers virtualize the operating system rather than the hardware.

In other respects the two are similar: just as multiple virtual machines can run on a single machine, so can multiple containers, but containers take less time to start up.

And while each virtual machine includes a complete copy of an operating system, applications, etc., containers can share the same OS kernel, which can make them lighter. Container images are typically tens of MB in size, while virtual machines can take up tens of GB.
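One way to see the shared kernel and the small image sizes for yourself is sketched below. The `alpine` image is an assumption, chosen only because it is small. Note that on macOS and Windows, Docker runs containers inside a lightweight Linux virtual machine, so the kernel reported by the container is that machine's kernel rather than your host's.

```shell
# Kernel version reported by the host.
host_kernel="$(uname -r)"
echo "Host kernel:      $host_kernel"

if command -v docker >/dev/null 2>&1; then
  # --rm deletes the container as soon as the command finishes.
  container_kernel="$(docker run --rm alpine uname -r)"
  echo "Container kernel: $container_kernel"
  # Print each local image with its size.
  docker image ls --format '{{.Repository}}:{{.Tag}}  {{.Size}}'
else
  echo "Docker not found: skipping the container side of the comparison."
fi
```

On a Linux host the two kernel versions should match, because the container does not boot an operating system of its own.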

There is more to explore, and I invite you to look into it, because none of this means we should stop using virtual machines or that Docker is better, only that we have another option.

Setups have also become more complex and flexible, with containers running inside virtual machines.

Download and install Docker

You can download and install Docker on multiple platforms (macOS, Windows and Linux), and you can consult the manual on the official website.
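Once the installer has finished, a quick check along these lines should confirm that everything works. This is a minimal sketch; `hello-world` is Docker's small official test image, and the status variable is only there for illustration.

```shell
# Check whether the Docker command-line client is on the PATH.
if command -v docker >/dev/null 2>&1; then
  docker_status="installed"
  docker --version               # print the client version
  docker run --rm hello-world    # pull and run the small test image
else
  docker_status="missing"
  echo "Docker not found: install it first, following the official manual."
fi
echo "Docker status: $docker_status"
```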

There are also several cloud service providers that let you use it.

Play with Docker

You also have the alternative of trying out Docker without installing it, using Play with Docker. As the name suggests, you can play with Docker by downloading images or repositories to run containers in Play with Docker instances, all at your fingertips with a Docker Hub account.

2021

Now you know more about the problems that arise in development, what Docker is and how it works as a solution, and a little about its system of containers and images, which you can create yourself or get from Docker Hub. You understand some of the differences between virtual machines and containers. You also know that Docker is multi-platform and that you can experiment with it without installing it on your computer, using Play with Docker.

Today more and more job offers ask for Docker, sometimes as a plus on top of the requirements for the post. Remember: if you are in the world of software development and you want to build, distribute and run code anywhere with ease, solve your problems, experiment with new technologies, learn, and understand the idea behind the title of this article… you need to learn Docker.