Roger Pou Lopez

Data Scientist

RAG, an acronym for ‘Retrieval Augmented Generation,’ is an innovative strategy in natural language processing. It works together with Large Language Models (LLMs), such as those ChatGPT uses internally (GPT-3.5-turbo or GPT-4), with the aim of improving response quality and reducing certain undesired behaviors, such as hallucinations.

These systems combine vectorization and semantic search with LLMs to augment the model's knowledge with external information that was not included in its training data and is therefore unknown to it.

There are several arguments in favor of using RAGs:

- They reduce the level of hallucination exhibited by the models. LLMs often respond with incorrect (or invented) information even though the response sounds plausible; this is called hallucination. One of the main objectives of RAG is to minimize these situations as much as possible, especially when asking about specific things. This is very useful if you want to use an LLM in production.

- With a RAG, it is no longer necessary to retrain the LLM. Retraining can be expensive, since it requires GPUs, in addition to the complexity that training itself entails.

- They are economical, fast (they rely on indexed information), and independent of the model being used (at any time, we can switch from GPT-3.5 to Llama-2-70B).

Drawbacks:

- They are less helpful for tasks such as code assistance or mathematics, and setting one up is not as straightforward as sending a slightly modified prompt: additional components are required.

- Evaluating RAGs (covered later in this article) requires powerful models such as GPT-4.

Example Use Cases

RAGs are already used in several scenarios. The most typical is chatbots that answer questions about very specific business information.

- In call centers, agents are starting to use a chatbot with information about rates to respond quickly and effectively to the calls they receive.

- As sales-assistant chatbots, where they are gaining popularity. Here, RAGs help answer product comparisons or specific questions about a service, and recommend similar products.

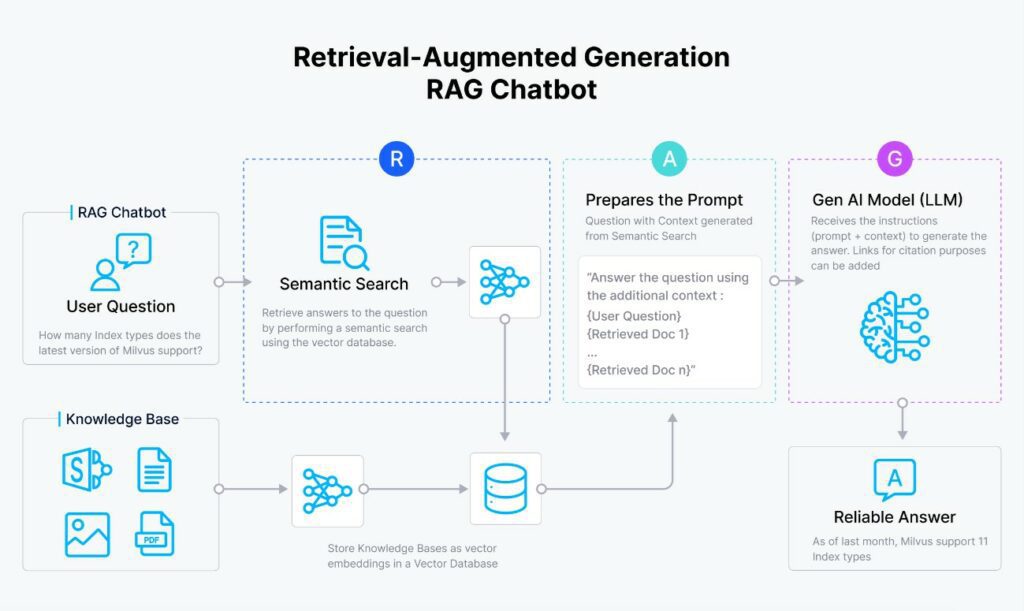

Components of a RAG

Let’s go over the components that make up a RAG to get a rough idea of each one, and then we’ll look at how they interact with each other.

Knowledge Base

This element is a somewhat open-ended but logical concept: it refers to objective knowledge that we know the LLM is not aware of and that carries a high risk of hallucination. This knowledge, in text form, can come in many file formats: PDF, Excel, Word, etc. Advanced RAGs are also capable of extracting knowledge from images and tables.

In general, all content will be converted to text and will need to be indexed. Since human-written texts are often unstructured, we split them into smaller pieces (chunks) using what are called chunking strategies.
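To make chunking concrete, here is a minimal sketch of the simplest possible strategy: fixed-size character windows with overlap. It is only an illustration under our own assumptions (`chunk_text` is a hypothetical helper, not a library function); LangChain's `RecursiveCharacterTextSplitter`, used later in this article, is smarter and tries to split on paragraph, line and word boundaries first.

```python
def chunk_text(text: str, chunk_size: int = 700, chunk_overlap: int = 50) -> list[str]:
    """Split text into fixed-size character chunks; consecutive chunks share
    `chunk_overlap` characters so text cut at a boundary keeps some context."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # this window already reaches the end
            break
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, chunk_overlap=1))
# ['abcd', 'defg', 'ghij']
```

The overlap is the design choice worth noticing: without it, a sentence split across two chunks would be incomplete in both of them.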

Embedding Model

An embedding is the vector representation generated by a neural network trained on a dataset (text, images, sound, etc.) that is capable of summarizing the information of an object of that same type into a vector within a specific vector space.

For example, if one text says ‘I like blue rubber ducks’ and another says ‘I love yellow rubber ducks,’ their vectors will be closer to each other than to the vector of a text saying ‘The cars of the future are electric cars.’
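“Closer in distance” is usually measured with cosine similarity. The sketch below uses a deliberately crude stand-in for an embedding model, a bag-of-words count vector (our assumption, not a neural embedding), only to show how the comparison is computed. A bag of words rewards shared words and nothing else; a real embedding model would also capture that ‘like’/‘love’ and ‘blue’/‘yellow’ are related.

```python
import math
from collections import Counter


def bow_vector(text: str) -> Counter:
    """Toy 'embedding': word counts (a stand-in for a neural embedding model)."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse vectors: 1 = same direction, 0 = unrelated."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


ducks_1 = bow_vector("I like blue rubber ducks")
ducks_2 = bow_vector("I love yellow rubber ducks")
cars = bow_vector("The cars of the future are electric cars")

print(round(cosine_similarity(ducks_1, ducks_2), 3))  # 0.6
print(round(cosine_similarity(ducks_1, cars), 3))     # 0.0
```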

This component is what will subsequently allow us to index the different chunks of text information correctly.

Vector Database

This is where we store and index the chunk vectors produced by the embedding model. It is a very important and complex component; fortunately, there are already several solid open-source solutions that make it relatively ‘easy’ to deploy, such as Milvus or Chroma.
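Conceptually, a vector database stores each chunk next to its embedding and answers the question “which stored vectors are closest to this one?”. The class below is a hypothetical in-memory toy that does this with a brute-force scan over normalized vectors. Production systems such as Milvus or Chroma typically rely on approximate nearest-neighbour indexes to stay fast at scale, and add persistence, metadata filtering and more.

```python
import math


class InMemoryVectorStore:
    """Toy vector database: keeps (text, unit vector) pairs and returns the
    k entries with the highest cosine similarity to a query vector."""

    def __init__(self) -> None:
        self._texts: list[str] = []
        self._vectors: list[list[float]] = []

    @staticmethod
    def _normalize(vector: list[float]) -> list[float]:
        norm = math.sqrt(sum(x * x for x in vector))
        return [x / norm for x in vector] if norm else list(vector)

    def add(self, text: str, vector: list[float]) -> None:
        # Storing unit vectors turns cosine similarity into a plain dot product
        self._texts.append(text)
        self._vectors.append(self._normalize(vector))

    def search(self, query: list[float], k: int = 4) -> list[tuple[float, str]]:
        q = self._normalize(query)
        scored = [
            (sum(a * b for a, b in zip(q, v)), text)
            for text, v in zip(self._texts, self._vectors)
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)  # most similar first
        return scored[:k]


# Usage with made-up 3-dimensional vectors (real embeddings have hundreds or thousands)
store = InMemoryVectorStore()
store.add("chunk about rubber ducks", [0.9, 0.1, 0.0])
store.add("chunk about electric cars", [0.0, 0.2, 0.9])
store.add("chunk about bath toys", [0.8, 0.3, 0.1])
for score, text in store.search([1.0, 0.2, 0.0], k=2):
    print(f"{score:.3f}  {text}")
```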

LLM

The LLM is, logically, an essential component, since a RAG is a solution that helps these models respond more accurately. We don’t have to restrict ourselves to very large, capable (but expensive) models like GPT-4; smaller and ‘simpler’ models, in terms of response quality and number of parameters, can also be used.

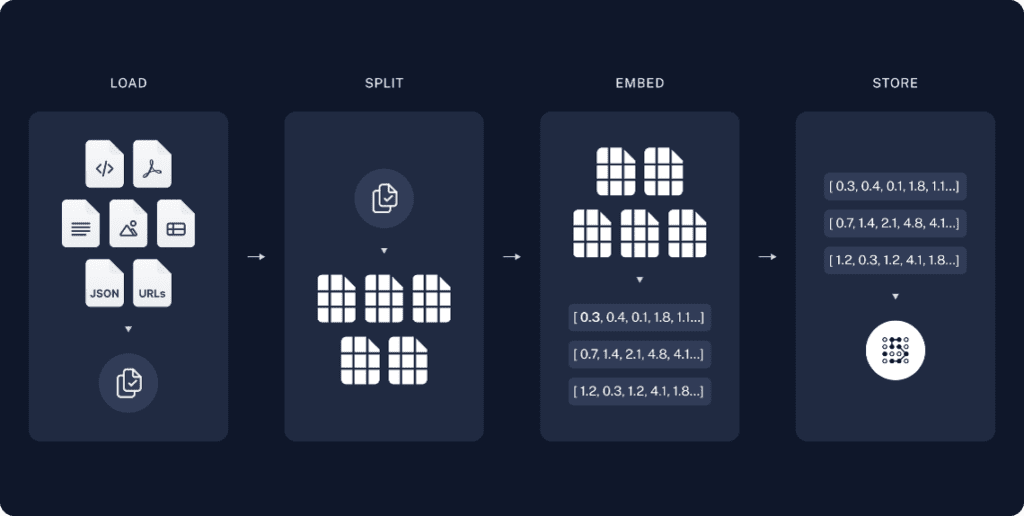

Below we can see a representative image of the process of loading information into the vector database.

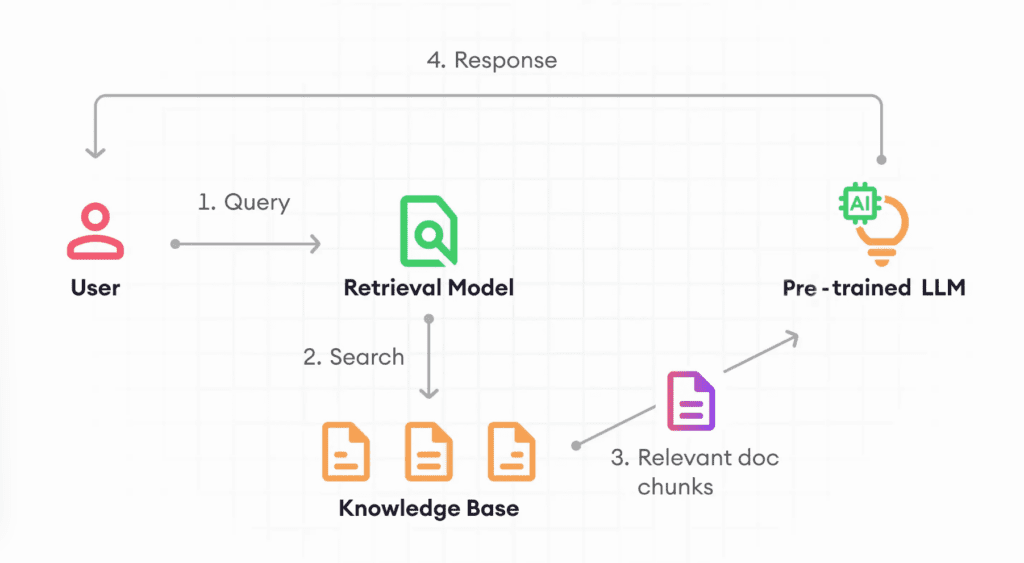

High-Level Operation

Now that we have a clearer understanding of the puzzle pieces, some questions arise:

- How do these components interact with each other?

- Why is a vector database necessary?

Let’s try to clarify the matter a bit.

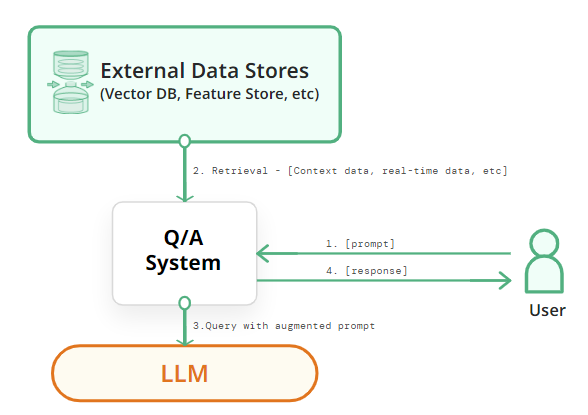

The intuitive idea of how a RAG works is as follows:

- The user asks a question. We transform the question into a vector using the same embedding system we used to store the chunks. This allows us to compare our question with all the information we have indexed in our vector database.

- We calculate the distances between the question vector and all the vectors in the database. Using some selection strategy, we pick a few chunks and add their content to the prompt as context. The simplest strategy is to select the K vectors closest to the question.

- We pass everything to the LLM to generate a response based on the retrieved contexts. That is, the prompt contains instructions + question + context returned by the Retrieval system. This is where the ‘Augmentation’ in the RAG acronym comes from: we are augmenting the prompt.

- The LLM generates a response based on the question we ask and the context we have passed. This will be the response that the user will see.
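The augmentation step itself is plain string formatting. A minimal sketch, assuming the retrieval step has already returned its top-K chunks as strings; the template reuses the instructions from the practical example later in this article, and `augment_prompt` is a hypothetical helper name.

```python
TEMPLATE = """Answer the question based only on the following context. If you cannot answer the question with the context, please respond with 'I don't know':

Context:
{context}

Question:
{question}
"""


def augment_prompt(question: str, retrieved_chunks: list[str]) -> str:
    """Build the final prompt: instructions + retrieved context + user question."""
    context = "\n\n".join(retrieved_chunks)  # separate chunks with blank lines
    return TEMPLATE.format(context=context, question=question)


prompt = augment_prompt(
    "What did the author work on before college?",
    ["Before college the two main things I worked on, outside of school, "
     "were writing and programming."],
)
print(prompt)  # this string is what gets sent to the LLM
```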

This is why we need an embedding model and a vector database; that’s where the trick lies. If we can find information in the vector database that is very similar to the question, we have likely found content that helps answer it. For this, we need a way to compare texts objectively (the embeddings), and we cannot keep the information stored in an unstructured way if we need to ask questions frequently.

Also, since everything ultimately ends up in the prompt, the approach is independent of the LLM we use.

Evaluation of RAGs

Just as with classical statistical or data science models, we need to quantify how a model performs before using it in production.

The most basic strategy (for example, to measure the performance of a linear regression) is to split the dataset into parts such as train and test (80% and 20%, respectively), train the model on the train set, and evaluate it on the test set with metrics like root-mean-square error, since the test set contains data the model hasn’t seen. A RAG, however, involves no training: it is a system composed of several elements, one of which is a text generation model.
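For comparison, this is what that classical workflow looks like in code: a minimal, dependency-free sketch on synthetic data (the data and helper names are our own assumptions). It makes an 80/20 split, fits a least-squares line on the training part, and computes the root-mean-square error, RMSE = sqrt(mean((y - y_pred)^2)), on the held-out part. None of these steps has a direct equivalent for a RAG.

```python
import math
import random


def train_test_split(xs, ys, test_fraction=0.2, seed=0):
    """Shuffle the indices once and hold out `test_fraction` of the rows for testing."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    n_test = int(len(idx) * test_fraction)
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    return ([xs[i] for i in train_idx], [ys[i] for i in train_idx],
            [xs[i] for i in test_idx], [ys[i] for i in test_idx])


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def rmse(y_true, y_pred):
    """Root-mean-square error: square root of the mean squared residual."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


# Synthetic data: y = 3x + 2 with a deterministic +/-0.5 wobble acting as "noise"
xs = [float(i) for i in range(50)]
ys = [3 * x + 2 + (0.5 if i % 2 else -0.5) for i, x in enumerate(xs)]

x_train, y_train, x_test, y_test = train_test_split(xs, ys)  # 40 train / 10 test rows
slope, intercept = fit_line(x_train, y_train)
test_rmse = rmse(y_test, [slope * x + intercept for x in x_test])
print(f"slope={slope:.2f} intercept={intercept:.2f} test RMSE={test_rmse:.3f}")
```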

Moreover, here we don’t have quantitative data (i.e., numbers): the data consists of generated text that can vary depending on the question asked, the context found by the Retrieval system, and even the non-deterministic behavior of neural network models.

One basic strategy is to analyze manually how well our system performs by asking questions and inspecting the responses and the returned contexts. But this approach becomes impractical when we want to evaluate all possible questions over very large documents, and to do so repeatedly.

So, how can we do this evaluation?

The trick: leveraging the LLMs themselves. With them, we can build a synthetic dataset that simulates asking questions to our system, just as a human would. We can even add another level of sophistication: using a smarter model than the one being evaluated to act as a critic, indicating whether what is happening makes sense or not.

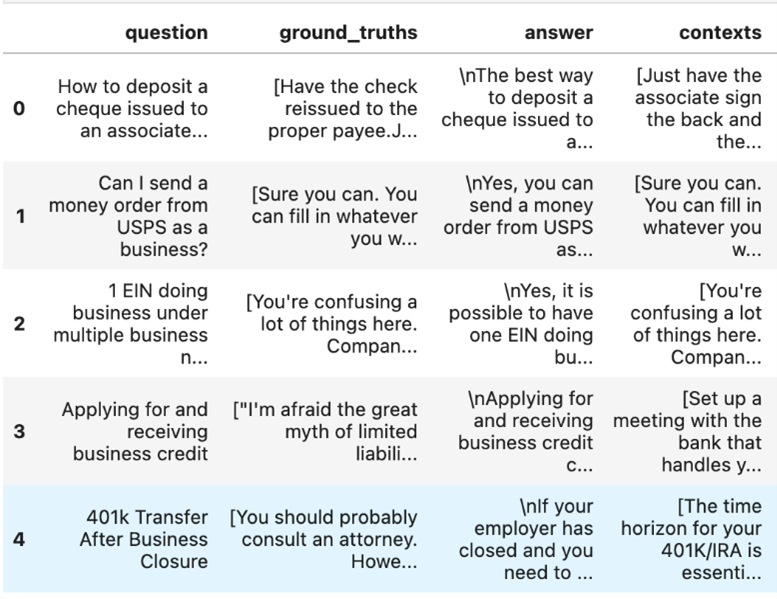

Example of Evaluation Dataset

What we have here are samples of Question-Answer pairs showing how our RAG system would have performed, simulating the questions a human might ask in comparison to the model we are evaluating. To do this, we need two models: the LLM we would use in our RAG, for example, GPT-3.5-turbo (Answer), and another model with better performance to generate a ‘truth’ (Ground Truth), such as GPT-4.

In other words, GPT-3.5 would act as the question-generation system, and GPT-4 would serve as the critic.

Once we have generated our evaluation dataset, the next step is to quantify it numerically using some form of metric.

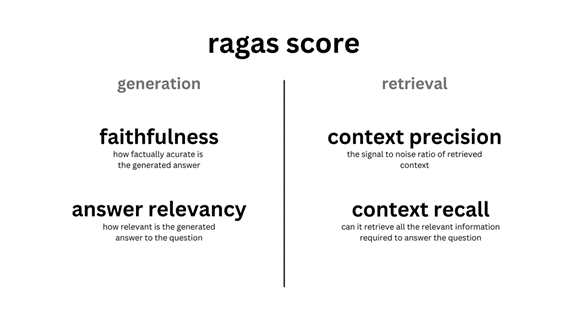

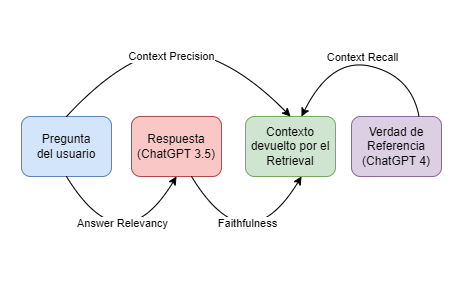

Evaluation Metrics

Evaluating generated responses is a relatively new field, but there are already open-source projects that effectively quantify the quality of RAGs. These evaluation systems can measure the ‘Retrieval’ and ‘Generation’ parts separately.

Faithfulness Score

It measures how consistent the response is with the retrieved context, that is, what percentage of the claims in the answer are supported by the context obtained through our system. This metric helps control the hallucinations that LLMs may have: a very low value would mean that the model is making things up even when given a context. It should therefore be as close to 1 as possible.
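According to the RAGAS documentation, an LLM first breaks the answer into individual claims and checks each one against the retrieved context; faithfulness is the fraction of claims that are supported. The sketch below shows only that final arithmetic, assuming the judge's per-claim verdicts are already available as booleans (the LLM step is left out, and `faithfulness_score` is a hypothetical name, not the RAGAS API).

```python
def faithfulness_score(claim_supported: list[bool]) -> float:
    """Fraction of the answer's claims that the judge found supported by the context."""
    if not claim_supported:
        raise ValueError("the answer must contain at least one claim")
    return sum(claim_supported) / len(claim_supported)


# Hypothetical verdicts: the judge extracted 4 claims and could verify 3 of them
print(faithfulness_score([True, True, True, False]))  # 0.75
```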

Answer Relevancy Score

It quantifies how relevant the response is to the question asked. If the response is not relevant to what we asked, the system is not answering properly. Therefore, the higher this metric, the better.
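The RAGAS documentation describes this metric as follows: an LLM generates several questions back from the answer, and the score is the mean cosine similarity between their embeddings and the embedding of the original question. A minimal sketch of that averaging, assuming the embeddings are already computed (the 2-D vectors below are made up, and `answer_relevancy_score` is a hypothetical name).

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def answer_relevancy_score(question_vec: list[float],
                           generated_question_vecs: list[list[float]]) -> float:
    """Mean cosine similarity between the original question's embedding and the
    embeddings of questions an LLM reconstructed from the answer."""
    sims = [cosine(question_vec, g) for g in generated_question_vecs]
    return sum(sims) / len(sims)


# Made-up embeddings: one reconstructed question matches, the other is off-topic
print(answer_relevancy_score([1.0, 0.0], [[2.0, 0.0], [0.0, 1.0]]))  # 0.5
```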

Context Precision Score

It evaluates whether the retrieved context chunks that are relevant to the ground truth are ranked at the top of the results.
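The RAGAS documentation defines this as a weighted precision: precision@k is computed at every rank k that holds a relevant chunk, and those values are averaged over the number of relevant chunks. A minimal sketch, assuming the relevance of each ranked chunk (1 = useful for the ground truth, 0 = not) has already been judged; `context_precision_score` is a hypothetical name.

```python
def context_precision_score(relevance: list[int]) -> float:
    """Average of precision@k taken at every rank k that holds a relevant chunk.

    `relevance[k-1]` is 1 if the chunk at rank k is relevant to the ground truth, else 0.
    """
    relevant_so_far = 0
    total = 0.0
    for k, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            relevant_so_far += 1
            total += relevant_so_far / k  # precision@k at this relevant position
    return total / relevant_so_far if relevant_so_far else 0.0


print(context_precision_score([1, 1, 0, 0]))  # 1.0: relevant chunks ranked first
print(context_precision_score([0, 0, 1, 1]))  # same chunks ranked last: much lower
```

The order matters, not just the set: both lists contain the same two relevant chunks, but only the first ranks them where the LLM is most likely to use them.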

Context Recall Score

It quantifies how well the returned context aligns with the annotated response (the ground truth), that is, how much of the information needed to answer the question is actually present in the retrieved context. A low value would indicate that the returned context is missing relevant information and does not help us answer the question.

How these metrics are computed is a bit more complex, but the RAGAS documentation contains well-explained examples.
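In RAGAS, context recall is described as the fraction of ground-truth sentences that can be attributed to the retrieved context, with an LLM deciding the attribution. The sketch below keeps that structure but swaps the LLM for a deliberately naive word-lookup judge, a hypothetical stand-in used only so the example runs offline; the context and ground-truth strings are made up.

```python
import re
from typing import Callable


def context_recall_score(ground_truth: str, is_attributable: Callable[[str], bool]) -> float:
    """Fraction of ground-truth sentences the judge can attribute to the retrieved context."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", ground_truth.strip()) if s]
    if not sentences:
        raise ValueError("ground truth has no sentences")
    return sum(is_attributable(s) for s in sentences) / len(sentences)


retrieved_context = "Before college the author wrote short stories. He also did some programming."


def naive_judge(sentence: str) -> bool:
    # Hypothetical stand-in for the LLM judge: every word must appear in the context
    words = re.findall(r"\w+", sentence.lower())
    return all(word in retrieved_context.lower() for word in words)


print(context_recall_score("The author wrote short stories. He also painted.", naive_judge))  # 0.5
```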

Practical Example using LangChain, OpenAI, and ChromaDB

We are going to use the LangChain framework, which allows us to build a RAG very easily.

The dataset we will use is an essay by Paul Graham, a typical dataset for this kind of example and small in size.

The vector database will be Chroma, which is open source and fully integrated with LangChain. We will use it transparently, with its default parameters.

NOTE: Each call to a model incurs a monetary cost, so it’s advisable to review OpenAI’s pricing. We will work with a small dataset of 10 questions, but the cost can grow if this is scaled up.

```python
import os

from dotenv import load_dotenv

load_dotenv()  # Load the OpenAI API key (OPENAI_API_KEY) from a .env file

from langchain_community.document_loaders import TextLoader
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_openai import OpenAIEmbeddings
from langchain_community.vectorstores import Chroma
from langchain.prompts import ChatPromptTemplate

embeddings = OpenAIEmbeddings(
    model="text-embedding-ada-002"
)

# Split the essay into ~700-character chunks that overlap by 50 characters
text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=700,
    chunk_overlap=50
)

loader = TextLoader('paul_graham/paul_graham_essay.txt')
text = loader.load()
documents = text_splitter.split_documents(text)
print(f'Number of chunks generated from the document: {len(documents)}')

# Embed every chunk and index it in Chroma (default parameters)
vector_store = Chroma.from_documents(documents, embeddings)
retriever = vector_store.as_retriever()
```

```text
Number of chunks generated from the document: 158
```

Since the essay is in English, our prompt template must be in English too.

```python
from langchain.prompts import ChatPromptTemplate

template = """Answer the question based only on the following context. If you cannot answer the question with the context, please respond with 'I don't know':

Context:
{context}

Question:
{question}
"""

prompt = ChatPromptTemplate.from_template(template)
```

Now we define our RAG chain using LCEL (LangChain Expression Language). The model that will answer the questions is GPT-3.5-turbo. It’s important to set the temperature parameter to 0 so that the model is not creative.

```python
from operator import itemgetter

from langchain_openai import ChatOpenAI
from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough

primary_qa_llm = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=0)

retrieval_augmented_qa_chain = (
    # Retrieve chunks for the question and pass the question through unchanged
    {"context": itemgetter("question") | retriever, "question": itemgetter("question")}
    | RunnablePassthrough.assign(context=itemgetter("context"))
    # Generate the answer, keeping the retrieved contexts so we can inspect them
    | {"response": prompt | primary_qa_llm, "context": itemgetter("context")}
)
```

...and now we can start asking our RAG system questions.

```python
question = "What was doing the author before collegue? "
result = retrieval_augmented_qa_chain.invoke({"question": question})
print(f'Answer: {result["response"].content}')
```

```text
Answer: The author was working on writing and programming before college.
```

We can also inspect which contexts our retriever returned. As mentioned, we use the default retrieval strategy, which returns the top 4 contexts for each question.

```python
display(retriever.get_relevant_documents(question))
```

```text
[Document(page_content="What I Worked On\n\nFebruary 2021\n\nBefore college the two main things I worked on, outside of school, were writing and programming. I didn't write essays. I wrote what beginning writers were supposed to write then, and probably still are: short stories. My stories were awful. They had hardly any plot, just characters with strong feelings, which I imagined made them deep.", metadata={'source': 'paul_graham/paul_graham_essay.txt'}),
 Document(page_content="Over the next several years I wrote lots of essays about all kinds of different topics. O'Reilly reprinted a collection of them as a book, called Hackers & Painters after one of the essays in it. I also worked on spam filters, and did some more painting. I used to have dinners for a group of friends every thursday night, which taught me how to cook for groups. And I bought another building in Cambridge, a former candy factory (and later, twas said, porn studio), to use as an office.", metadata={'source': 'paul_graham/paul_graham_essay.txt'}),
 Document(page_content="In the print era, the channel for publishing essays had been vanishingly small. Except for a few officially anointed thinkers who went to the right parties in New York, the only people allowed to publish essays were specialists writing about their specialties. There were so many essays that had never been written, because there had been no way to publish them. Now they could be, and I was going to write them. [12]\n\nI've worked on several different things, but to the extent there was a turning point where I figured out what to work on, it was when I started publishing essays online. From then on I knew that whatever else I did, I'd always write essays too.", metadata={'source': 'paul_graham/paul_graham_essay.txt'}),
 Document(page_content="Wow, I thought, there's an audience. If I write something and put it on the web, anyone can read it. That may seem obvious now, but it was surprising then. In the print era there was a narrow channel to readers, guarded by fierce monsters known as editors. The only way to get an audience for anything you wrote was to get it published as a book, or in a newspaper or magazine. Now anyone could publish anything.", metadata={'source': 'paul_graham/paul_graham_essay.txt'})]
```

Evaluating our RAG

Now that we have our RAG set up thanks to LangChain, we still need to evaluate it.

Both LangChain and LlamaIndex seem to be adding easy ways to evaluate RAGs without leaving the framework. For now, however, the best option is RAGAS, the library mentioned earlier, which is designed specifically for this purpose. Internally, it uses GPT-4 as the critic model, as mentioned before.

```python
from ragas.testset.generator import TestsetGenerator
from ragas.testset.evolutions import simple, reasoning, multi_context

text = loader.load()

# Re-split the essay with larger chunks for test-set generation
text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=1000,
    chunk_overlap=200
)
documents = text_splitter.split_documents(text)

generator = TestsetGenerator.with_openai()

# 10 synthetic questions: 50% simple, 25% reasoning, 25% multi-context
testset = generator.generate_with_langchain_docs(
    documents,
    test_size=10,
    distributions={simple: 0.5, reasoning: 0.25, multi_context: 0.25}
)

test_df = testset.to_pandas()
display(test_df)
```

| | question | contexts | ground_truth | evolution_type | episode_done |
|---|---|---|---|---|---|
| 0 | What is the batch model and how does it relate… | [The most distinctive thing about YC is the ba… | The batch model is a method used by YC (Y Comb… | simple | True |
| 1 | How did the use of Scheme in the new version o… | [In the summer of 2006, Robert and I started w… | The use of Scheme in the new version of Arc co… | simple | True |
| 2 | How did learning Lisp expand the author’s conc… | [There weren’t any classes in AI at Cornell th… | Learning Lisp expanded the author’s concept of… | simple | True |
| 3 | How did Moore’s Law contribute to the downfall… | [[4] You can of course paint people like still… | Moore’s Law contributed to the downfall of com… | simple | True |
| 4 | Why did the creators of Viaweb choose to make … | [There were a lot of startups making ecommerce… | The creators of Viaweb chose to make their eco… | simple | True |
| 5 | During the author’s first year of grad school … | [I applied to 3 grad schools: MIT and Yale, wh… | | reasoning | True |
| 6 | What suggestion from a grad student led to the… | [McCarthy didn’t realize this Lisp could even … | | reasoning | True |
| 7 | What makes paintings more realistic than photos? | [life interesting is that it’s been through a … | By subtly emphasizing visual cues, paintings c… | multi_context | True |
| 8 | “What led Jessica to compile a book of intervi… | [Jessica was in charge of marketing at a Bosto… | Jessica’s realization of the differences betwe… | multi_context | True |
| 9 | Why did the founders of Viaweb set their price… | [There were a lot of startups making ecommerce… | The founders of Viaweb set their prices low fo… | simple | True |

```python
# Run every synthetic question through our RAG chain, collecting answers and contexts
test_questions = test_df["question"].values.tolist()
test_groundtruths = test_df["ground_truth"].values.tolist()

answers = []
contexts = []

for question in test_questions:
    response = retrieval_augmented_qa_chain.invoke({"question": question})
    answers.append(response["response"].content)
    contexts.append([context.page_content for context in response["context"]])

from datasets import Dataset  # Hugging Face datasets

response_dataset = Dataset.from_dict({
    "question": test_questions,
    "answer": answers,
    "contexts": contexts,
    "ground_truth": test_groundtruths
})

from ragas import evaluate
from ragas.metrics import (
    faithfulness,
    answer_relevancy,
    context_recall,
    context_precision,
)

metrics = [
    faithfulness,
    answer_relevancy,
    context_recall,
    context_precision,
]

results = evaluate(response_dataset, metrics=metrics)
results_df = results.to_pandas().dropna()  # drop rows with missing values
display(results_df)
```

| | question | answer | contexts | ground_truth | faithfulness | answer_relevancy | context_recall | context_precision |
|---|---|---|---|---|---|---|---|---|
| 0 | What is the batch model and how does it relate… | The batch model is a system where YC funds a g… | [The most distinctive thing about YC is the ba… | The batch model is a method used by YC (Y Comb… | 0.750000 | 0.913156 | 1.0 | 1.000000 |
| 1 | How did the use of Scheme in the new version o… | The use of Scheme in the new version of Arc co… | [In the summer of 2006, Robert and I started w… | The use of Scheme in the new version of Arc co… | 1.000000 | 0.910643 | 1.0 | 1.000000 |
| 2 | How did learning Lisp expand the author’s conc… | Learning Lisp expanded the author’s concept of… | [So I looked around to see what I could salvag… | Learning Lisp expanded the author’s concept of… | 1.000000 | 0.924637 | 1.0 | 1.000000 |
| 3 | How did Moore’s Law contribute to the downfall… | Moore’s Law contributed to the downfall of com… | [[5] Interleaf was one of many companies that … | Moore’s Law contributed to the downfall of com… | 1.000000 | 0.940682 | 1.0 | 1.000000 |
| 4 | Why did the creators of Viaweb choose to make … | The creators of Viaweb chose to make their eco… | [There were a lot of startups making ecommerce… | The creators of Viaweb chose to make their eco… | 0.666667 | 0.960447 | 1.0 | 0.833333 |
| 5 | What suggestion from a grad student led to the… | The suggestion from grad student Steve Russell… | [McCarthy didn’t realize this Lisp could even … | The suggestion from a grad student, Steve Russ… | 1.000000 | 0.931730 | 1.0 | 0.916667 |
| 6 | What makes paintings more realistic than photos? | By subtly emphasizing visual cues such as the … | [copy pixel by pixel from what you’re seeing. … | By subtly emphasizing visual cues, paintings c… | 1.000000 | 0.963414 | 1.0 | 1.000000 |
| 7 | “What led Jessica to compile a book of intervi… | Jessica was surprised by how different reality… | [Jessica was in charge of marketing at a Bosto… | Jessica’s realization of the differences betwe… | 1.000000 | 0.954422 | 1.0 | 1.000000 |
| 8 | Why did the founders of Viaweb set their price… | The founders of Viaweb set their prices low fo… | [There were a lot of startups making ecommerce… | The founders of Viaweb set their prices low fo… | 1.000000 | 1.000000 | 1.0 | 1.000000 |

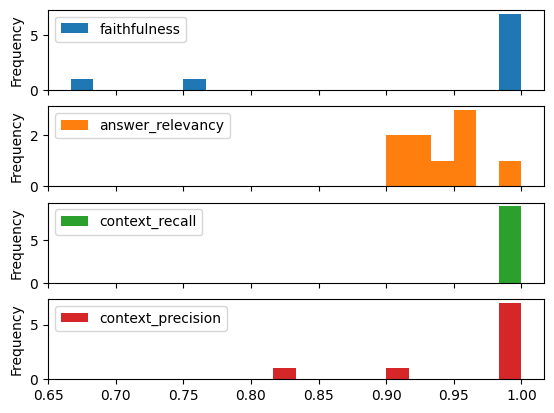

Next, we plot the distribution of each metric.

```python
results_df.plot.hist(subplots=True, bins=20)
```

We can see that the system is not perfect, even though we generated only 10 questions (more would be needed for a reliable assessment). We can also see that for one of them the test-set generator failed to create a ground truth, which is why that row was dropped.

Nevertheless, we could draw some conclusions:

- Sometimes the responses are not very faithful to the context.

- Answer relevancy varies, but it is consistently good.

- Context recall is perfect, but context precision is not as good.
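Besides the histograms, the same conclusions can be read from summary statistics. In the notebook this would simply be `results_df.describe()`; to keep the sketch runnable without any API calls, the metric values below are copied by hand from the results table above (9 rows after `dropna()`).

```python
from statistics import mean, median

# Metric values copied from the results table above
scores = {
    "faithfulness": [0.75, 1.0, 1.0, 1.0, 0.666667, 1.0, 1.0, 1.0, 1.0],
    "answer_relevancy": [0.913156, 0.910643, 0.924637, 0.940682, 0.960447,
                         0.931730, 0.963414, 0.954422, 1.0],
    "context_recall": [1.0] * 9,
    "context_precision": [1.0, 1.0, 1.0, 1.0, 0.833333, 0.916667, 1.0, 1.0, 1.0],
}

for name, values in scores.items():
    print(f"{name:<18} mean={mean(values):.3f} min={min(values):.3f} median={median(values):.3f}")
```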

Now, here we can consider trying different elements:

- Switching to a different embedding model, for example one from the Hugging Face MTEB Leaderboard.

- Improving the retrieval system with strategies other than the default.

- Evaluating with other LLMs.

With these options, we can analyze each strategy and choose the one that best fits our data or our budget.
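As an example of a retrieval strategy other than plain top-K similarity, Maximal Marginal Relevance (MMR) picks chunks that are relevant to the question but not redundant with chunks already selected. LangChain exposes it through `vector_store.as_retriever(search_type="mmr")`; the dependency-free sketch below only illustrates the idea with made-up vectors and is not LangChain's implementation. `lambda_mult` (borrowing the name of LangChain's parameter) trades relevance (1.0) against diversity (0.0).

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def top_k(query, candidates, k):
    """Default-style retrieval: the k candidates most similar to the query."""
    ranked = sorted(range(len(candidates)), key=lambda i: cosine(query, candidates[i]), reverse=True)
    return ranked[:k]


def mmr(query, candidates, k, lambda_mult=0.5):
    """Greedy Maximal Marginal Relevance selection; returns candidate indices."""
    selected, remaining = [], list(range(len(candidates)))
    while remaining and len(selected) < k:
        def mmr_score(i):
            relevance = cosine(query, candidates[i])
            # Penalize similarity to anything already selected
            redundancy = max((cosine(candidates[i], candidates[j]) for j in selected), default=0.0)
            return lambda_mult * relevance - (1 - lambda_mult) * redundancy
        best = max(remaining, key=mmr_score)
        selected.append(best)
        remaining.remove(best)
    return selected


query = [1.0, 1.0, 0.0]
chunks = [
    [1.0, 1.0, 0.10],  # 0: very relevant
    [1.0, 1.0, 0.12],  # 1: near-duplicate of chunk 0
    [1.0, 0.0, 0.00],  # 2: less similar, but adds different information
]
print(top_k(query, chunks, k=2))                 # [0, 1]
print(mmr(query, chunks, k=2, lambda_mult=0.3))  # [0, 2]
```

Plain top-K spends the second slot on a near-duplicate, while MMR trades a little relevance for extra information, which is one way context precision and recall could move when changing the retriever.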

Conclusions

In this article, we have seen what a RAG consists of and how to evaluate a complete workflow. This topic is currently booming, as RAG is one of the most effective and cost-efficient alternatives to fine-tuning LLMs.

New metrics and frameworks may well make evaluating RAGs simpler and more effective. In upcoming articles, we will follow their evolution and also see how to bring a RAG-based architecture into production.